Reveleer Quality Data Abstraction

The Reveleer Platform for Quality Measures Abstraction is an AI-powered tool that helps health plans track and close care gaps as part of quality improvement. Its AI engine enables clinical abstractors to review patient records efficiently, detect key diagnoses, identify missing or incomplete quality measures, and document outcomes to support accurate HEDIS reporting.

This project focused on improving the abstraction experience — streamlining workflows, reducing manual effort, and ensuring abstractors can confidently and accurately complete their reviews within tight seasonal deadlines.

Role

Product Designer

Timeline

Feb 2025 - June 2025

Team

1 Director of Experience

1 Product Designer

1 Illustration Designer

Stakeholders

Product Managers (Clinical / AI)

Engineers (AI / Front-end / QA)

Clinical Innovation Lead

Customer Success Manager

Tools

Figma

Jira

Process

IMPACT

+20%

Increased Coding Productivity

Productivity increased from 5.5 tasks/hour to 6.6 tasks/hour

1.2M

High-Volume Processing

1.2M patient charts processed in 4 months with AI (NLP), accelerating review efficiency

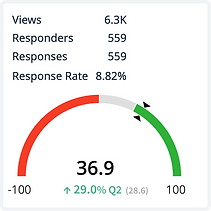

+29%

NPS growth in 6 months

Customer satisfaction improved significantly post-redesign, with NPS increasing 29% from Q2 to Q4.


What is Quality Improvement Abstraction?

In healthcare, quality improvement abstraction is the process of extracting key clinical data from patient records to evaluate and improve care quality. Abstractors review charts to capture specific quality measures—such as cancer screenings or blood pressure control—that reflect how well providers are meeting clinical standards.

In my project at Reveleer, I designed the Quality Data Entry platform used by both internal abstractors and health plan customers. This abstraction work supports national quality programs (e.g., HEDIS, Star Ratings) and payer reporting, which in turn influence compliance, care delivery, and incentive-based reimbursement.

By integrating AI capabilities directly into the abstraction workflow, the platform now helps abstractors:

- Automatically detect and surface relevant clinical evidence from unstructured medical records using NLP and OCR
- Pre-populate quality measure fields with AI-suggested values to reduce repetitive data entry
- Highlight evidence, confidence scores, and source context, improving trust and decision-making
- Enable human validation and override, ensuring compliance in high-risk, regulated environments

A reliable and efficient platform is critical—inaccurate or delayed data entry can impact patient outcomes, financial performance, and regulatory standing.

We use AI-powered abstraction to transform raw medical records into CMS-ready data—helping health plans improve reimbursement accuracy, accelerate compliance, and reduce manual effort.

Challenge

The core challenge of this project was designing a single abstraction platform that serves two very different user groups — internal Reveleer abstractors and external platform customers — each with unique workflows, goals, and expectations.

To meet this challenge, we identified two primary user types and visualized their commonalities and differences to guide design priorities:

Reveleer Abstractors

Customer Abstractors

Salaried staff

Productivity boost

Require manager coordination

Open to AI support

Supports AI growth

High time pressure

Serve varied client workflows

Task-based compensation

Cost sensitive

Custom workflows

Stable work queues

Prefer manual data entry

Cautious with AI

Dynamic work queues

Accuracy & compliance

Clean UI

NLP capture support

Fast navigation

Ideation

How might we support two user groups with different workflows, goals, and preferences within one platform?

User Journey Maps: Two Perspectives

I mapped the journeys of internal and customer abstractors to uncover key differences and shared needs—insights that directly shaped my design decisions.

Opportunity

Key Design Opportunities

1. Flexible Workflows for Different Needs

2. Smarter, Controllable AI Assistance

3. Clearer Context & Compliance Visibility

4. Streamlined Data Entry Experience

5. Built-in Collaboration & Feedback Tools

Core Workflows

An AI-assisted workflow that detects clinical evidence, surfaces key diagnoses and suggestions, reduces manual effort, and enables fast, accurate submission with human oversight.

Solution

Note:

All patient information in this mockup is fictitious and used solely for demonstration, in compliance with HIPAA privacy standards.

Design

Final

From Priority to Action

A typical morning for our abstractors:

Dashboard → Confirm priorities → Filter tasks → Start work

Clear Priority Alignment via Built-in Messaging Tool

Helps ensure the most urgent or relevant work gets handled first, with fewer back-and-forths.

Streamlined Task Selection for Faster Starts

A clean search interface with multi-select filtering lets users find and launch the right tasks quickly.

Smart AI Validation

By combining visual hierarchy with interaction flexibility, this design supports both high-speed workflows and high-accuracy reviews. It empowers different user types while also contributing structured feedback to train and improve the AI engine.

Blue AI highlights guide attention to key values instantly—reducing eye strain and search time

One-click validation allows users to quickly confirm AI-captured data

Easily decline incorrect inputs

Flexibility to skip AI suggestions and enter values manually when needed

Intentional AI–User Feedback Loop

Annotations allow abstractors to leave contextual notes tied to specific data points—supporting teammate clarity, audit traceability, and continuous AI improvement.

This design places a strong emphasis on intentional AI–user interaction.

Instead of treating AI as a passive background tool, we designed a clear, continuous feedback loop that allows users to validate, correct, and guide AI outputs—helping the system become more accurate and reliable over time.

Approve or decline AI-suggested values

Confidence scores for AI outputs

Direct user override and modification
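To make the approve / decline / override loop more concrete, here is a minimal Python sketch of how each reviewer decision might be captured as a structured feedback record that could later inform AI improvement. All names (`Suggestion`, `Decision`, `FeedbackEvent`, `resolve`) and fields are hypothetical illustrations, not Reveleer's actual data model.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    # Hypothetical set of reviewer actions mirroring the UI options
    APPROVED = "approved"      # user confirmed the AI-captured value
    DECLINED = "declined"      # user rejected the AI value outright
    OVERRIDDEN = "overridden"  # user replaced it with their own value


@dataclass
class Suggestion:
    field_name: str
    ai_value: str
    confidence: float  # assumed 0.0-1.0 score shown next to the highlight
    source_page: int   # assumed page in the chart where evidence was found


@dataclass
class FeedbackEvent:
    field_name: str
    decision: Decision
    ai_value: str
    final_value: Optional[str]  # None when the suggestion was declined
    confidence: float
    note: str = ""              # optional annotation tied to this data point


def resolve(
    s: Suggestion,
    decision: Decision,
    user_value: Optional[str] = None,
    note: str = "",
) -> FeedbackEvent:
    """Record the human decision on one AI suggestion as a feedback event."""
    if decision is Decision.APPROVED:
        final = s.ai_value
    elif decision is Decision.DECLINED:
        final = None
    else:
        # An override only makes sense with a user-entered replacement value
        if user_value is None:
            raise ValueError("override requires a user-entered value")
        final = user_value
    return FeedbackEvent(s.field_name, decision, s.ai_value, final, s.confidence, note)
```

For example, `resolve(Suggestion("bp_systolic", "128", 0.92, 4), Decision.OVERRIDDEN, "132", note="Later reading on p.6")` keeps both the AI value and the human correction, which is the kind of paired signal a feedback loop needs.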

Flexible Highlighting Tool

The new highlight tool supports custom colors, styles, and in-line annotations—enabling teams to define visual cues, track authors, and flag critical info. Users praised its clarity and flexibility in supporting diverse workflows and smoother collaboration.

Performance, Work Queue and Help Center

To support abstractors in their day-to-day work, we designed a system that combines clear task ownership, performance visibility, and AI-powered in-app support.

Performance: Motivation through visibility

Work Queue: Clear daily focus

Help Center: AI-powered, in context

Outcomes

Usability Testing Summary

I conducted moderated usability testing with 8 internal and 6 external users across two abstraction workflows, covering 12 key tasks. The redesigned experience received an average satisfaction rating of 4.62/5.

Takeaways

This project challenged me to design for two distinct user groups with different workflows. Through focused interviews and usability testing, I uncovered their unique needs and delivered a flexible, unified solution.

Design for balance

Support diverse users with flexibility while avoiding feature bloat that slows learning and adds friction.

Start with MVP

Prioritizing core interactions over feature creep kept the UI focused and impactful.

Collaborate early

Partnering with PMs and engineers from the start ensured feasibility and efficiency.

Simplify with intent

Removing clutter is harder than adding features—but essential for usability and adoption.